Unknown threat actors have been observed weaponizing v0, a generative artificial intelligence (AI) tool from Vercel, to design fake sign-in pages that impersonate their legitimate counterparts.

“This observation signals a new evolution in the weaponization of generative AI by threat actors who have demonstrated an ability to generate a functional phishing site from simple text prompts,” Okta Threat Intelligence researchers Houssem Eddine Bordjiba and Paula De la Hoz said.

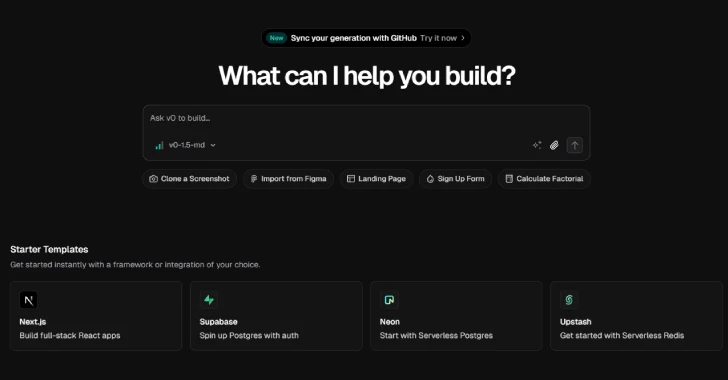

v0 is an AI-powered offering from Vercel that allows users to create basic landing pages and full applications using natural language prompts.

The identity services provider said it has observed scammers using the technology to develop convincing replicas of login pages for several brands, including an unnamed customer of its own. Following responsible disclosure, Vercel blocked access to these phishing sites.

The threat actors behind the campaign were also found to host other resources, such as the impersonated company logos, on Vercel's infrastructure, likely in an effort to abuse the trust associated with the developer platform and evade detection.

Unlike traditional phishing kits, which require a certain amount of effort to set up, tools such as v0 and its open-source clones on GitHub allow attackers to spin up fake pages just by typing a prompt. It is faster, easier, and does not require coding skills. This makes it simple for even low-skilled threat actors to build convincing phishing sites at scale.

“The observed activity confirms that today’s threat actors are actively experimenting with and weaponizing leading GenAI tools to streamline and enhance their phishing capabilities,” the researchers said.

“The use of a platform such as Vercel’s v0.dev allows emerging threat actors to rapidly produce high-quality, deceptive phishing pages, increasing the speed and scale of their operations.”

The development comes as bad actors continue to use large language models (LLMs) to aid their criminal activities, building uncensored versions of these models that are explicitly designed for illicit purposes. One such LLM that has gained popularity in the cybercrime landscape is WhiteRabbitNeo, which advertises itself as an “Uncensored AI model for (Dev)SecOps teams.”

“Cybercriminals are increasingly gravitating towards uncensored LLMs, cybercriminal-designed LLMs, and jailbreaking legitimate LLMs,” Cisco Talos researcher Jaeson Schultz said.

“Uncensored LLMs are unaligned models that operate without the constraints of guardrails. These systems happily generate sensitive, controversial, or potentially harmful output in response to user prompts. As a result, uncensored LLMs are perfectly suited for cybercriminal usage.”

This fits a bigger shift we are seeing: phishing is powered by AI in more ways than before. Fake emails, cloned voices, and even deepfake videos are showing up in social engineering attacks. These tools help attackers scale up quickly, turning small scams into large, automated campaigns. It is no longer just about tricking users; it is about building entire systems of deception.