Cybersecurity researchers have discovered multiple security flaws affecting open-source machine learning (ML) tools and frameworks such as MLflow, H2O, PyTorch, and MLeap that could pave the way for code execution.

The vulnerabilities, discovered by JFrog, are part of a broader collection of 22 security flaws that the supply chain security company first disclosed last month.

Unlike the first set, which involved server-side flaws, the newly detailed ones allow exploitation of ML clients and reside in libraries that handle safe model formats such as Safetensors.

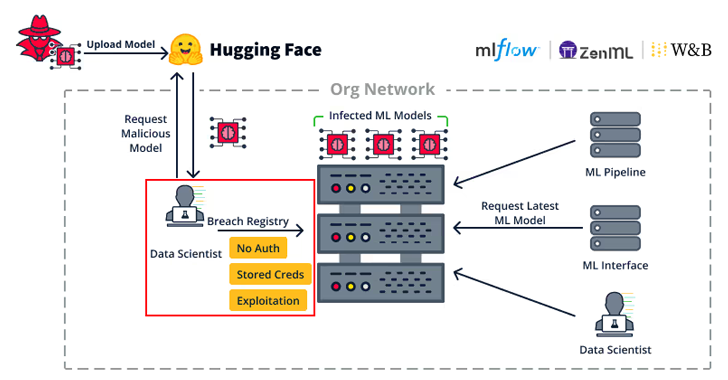

“The capture of an ML client within an organization can allow attackers to perform extensive lateral movements within the organization,” the company said. “It is highly likely that an ML client will access critical ML services such as ML model registries or MLOps pipelines.”

This, in turn, can expose sensitive information such as model registry credentials, effectively allowing a malicious actor to backdoor stored ML models or achieve code execution.

The list of vulnerabilities is given below –

- CVE-2024-27132 (CVSS Score: 7.2) – An insufficient sanitization issue in MLflow that leads to a cross-site scripting (XSS) attack when running an untrusted recipe in a Jupyter notebook, ultimately resulting in client-side remote code execution (RCE)

- CVE-2024-6960 (CVSS Score: 7.5) – An unsafe deserialization issue in H2O when importing an untrusted ML model that could lead to RCE

- A path traversal issue in PyTorch’s TorchScript feature that could lead to a denial of service (DoS) or code execution through an arbitrary file overwrite, which could be used to overwrite critical system files or a legitimate pickle file (no CVE ID)

- CVE-2023-5245 (CVSS Score: 7.5) – A path traversal issue in MLeap when loading a saved model in zipped format that could lead to a Zip Slip vulnerability, resulting in arbitrary file overwrite and possible code execution
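
To illustrate the Zip Slip class of bug in the last item, the following is a minimal Python sketch of the check that vulnerable extractors leave out: resolving each archive entry against the destination directory and rejecting anything that escapes it. It is not MLeap's code or JFrog's proof of concept. The function name `safe_extract` is hypothetical, and the extraction is written by hand purely for illustration (Python's own `zipfile.ZipFile.extractall` already strips `..` components).

```python
import os
import shutil
import zipfile


def safe_extract(archive: zipfile.ZipFile, dest_dir: str) -> list:
    """Extract `archive` into `dest_dir`, refusing entries that escape it.

    `safe_extract` is a hypothetical helper written for this illustration.
    """
    dest_root = os.path.realpath(dest_dir)
    members = archive.infolist()

    # First pass: validate every entry before writing anything to disk.
    for member in members:
        target = os.path.realpath(os.path.join(dest_root, member.filename))
        # An entry such as "../../etc/cron.d/job" resolves outside dest_root.
        if os.path.commonpath([dest_root, target]) != dest_root:
            raise ValueError(f"blocked path traversal entry: {member.filename!r}")

    # Second pass: write the validated entries.
    written = []
    for member in members:
        target = os.path.realpath(os.path.join(dest_root, member.filename))
        if member.is_dir():
            os.makedirs(target, exist_ok=True)
            continue
        os.makedirs(os.path.dirname(target), exist_ok=True)
        with archive.open(member) as src, open(target, "wb") as dst:
            shutil.copyfileobj(src, dst)
        written.append(target)
    return written
```

An extractor that skips the first pass and simply joins the entry name onto the destination path will write wherever the archive tells it to, which is how a crafted model archive can overwrite files outside the intended directory.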

JFrog pointed out that ML models should not be loaded blindly, even when they come in a format considered safe, such as Safetensors, because loading them can still result in arbitrary code execution.

“Artificial intelligence and machine learning (ML) tools hold enormous potential for innovation, but they can also open the door for attackers to inflict widespread harm on any organization,” Shachar Menashe, JFrog’s vice president of security research, said in a statement.

“To guard against these threats, it’s important to know what models you’re using and never download untrusted ML models, even from a ‘safe’ ML repository. In some cases, this can lead to remote code execution, causing significant damage to your organization.”
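
One way to act on the advice to "know what models you're using" is to pin each approved model file to a known digest and refuse to load anything that does not match. The Python sketch below assumes an organization records a SHA-256 digest for every model it has reviewed. The helper names `sha256_of_file` and `verify_model` are hypothetical, and this is not a procedure described by JFrog. A matching digest only shows that the file is the same one that was reviewed, not that the model is benign.

```python
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path: str, expected_sha256: str) -> bool:
    """Check a model file against a pinned digest (hypothetical helper).

    Returns True only if the file's digest equals `expected_sha256`.
    """
    actual = sha256_of_file(path)
    # Constant-time comparison of the two hex strings.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A loader would call `verify_model` before handing the file to any ML library and stop if it returns `False`.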