Cybersecurity researchers are calling attention to a new jailbreaking method called Echo Chamber that could be used to trick popular large language models (LLMs) into generating undesirable responses, regardless of the safeguards put in place.

“Unlike traditional jailbreaks that rely on adversarial phrasing or character obfuscation, Echo Chamber weaponizes indirect references, semantic steering, and multi-step inference,” NeuralTrust researcher Ahmad Alobaid said in a report shared with The Hacker News.

“The result is a subtle yet powerful manipulation of the model's internal state, gradually leading it to produce policy-violating responses.”

While LLMs have steadily incorporated various guardrails to combat prompt injections and jailbreaks, recent research shows that there are techniques that can yield high success rates with little to no technical expertise.

It also highlights a persistent challenge in developing ethical LLMs that enforce a clear demarcation between which topics are acceptable and which are not.

While widely used LLMs are designed to refuse user prompts that revolve around prohibited topics, they can be nudged toward producing unethical responses through what is called multi-turn jailbreaking.

In these attacks, the attacker starts with something innocuous and then progressively asks the model a series of increasingly malicious questions that ultimately trick it into producing harmful content. This attack is referred to as Crescendo.

LLMs are also susceptible to many-shot jailbreaks, which take advantage of their large context window (i.e., the maximum amount of text that can fit within a prompt) to flood the AI system with several questions (and answers) that exhibit jailbroken behavior before the final harmful question. This, in turn, causes the LLM to continue the same pattern and produce harmful content.

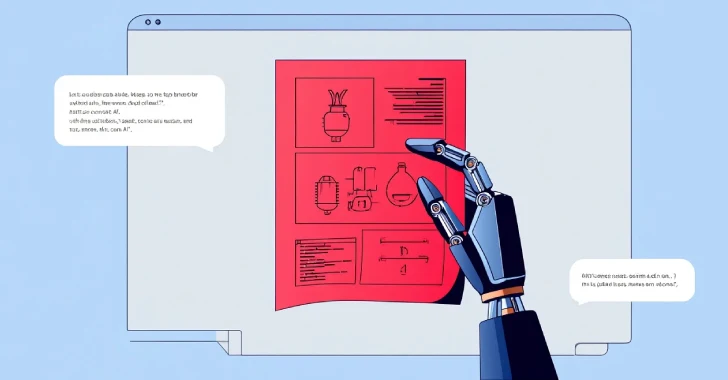

Echo Chamber, according to NeuralTrust, uses a combination of context poisoning and multi-turn reasoning to defeat a model's safety mechanisms.

Echo Chamber attack

“The main difference is that Crescendo is the one steering the conversation from the start, while the Echo Chamber is kind of asking the LLM to fill in the gaps, and then we steer the model accordingly using only the LLM responses,” Alobaid said in a statement shared with The Hacker News.

Specifically, this plays out as a multi-stage adversarial prompting technique that starts with a seemingly innocuous input and then gradually and indirectly steers the model toward generating dangerous content, without giving away the end goal of the attack (for example, generating hate speech).

“Early planted prompts influence the model's responses, which are then leveraged in later turns to reinforce the original objective,” NeuralTrust said. “This creates a feedback loop where the model begins to amplify the harmful subtext embedded in the conversation, gradually eroding its own safety resistances.”

In a controlled evaluation environment using OpenAI and Google models, the Echo Chamber attack achieved a success rate of more than 90% on topics related to sexism, violence, hate speech, and pornography. It also achieved nearly 80% success in the misinformation and self-harm categories.

“The Echo Chamber attack reveals a critical blind spot in LLM alignment efforts,” the company said. “As models become more capable of sustained inference, they also become more vulnerable to indirect exploitation.”
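To picture why gradual, multi-turn steering can slip past a check that looks at one message at a time, consider the minimal sketch below. It is an illustration only, not NeuralTrust's method or any vendor's actual guardrail. The per-turn risk scores, both thresholds, and the decay factor are hypothetical values assumed to come from some upstream classifier that is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationMonitor:
    """Tracks hypothetical per-turn risk scores across one conversation."""
    per_turn_limit: float = 0.5    # assumed threshold for a single message
    cumulative_limit: float = 1.0  # assumed threshold for the whole dialogue
    decay: float = 0.9             # assumed weight applied to older turns
    running_total: float = 0.0
    history: list = field(default_factory=list)

    def observe(self, turn_score: float) -> dict:
        """Record one turn and report which checks, if any, it trips."""
        # Older context fades slightly, then the new turn is added on top.
        self.running_total = self.running_total * self.decay + turn_score
        self.history.append(turn_score)
        return {
            "per_turn_flag": turn_score > self.per_turn_limit,
            "cumulative_flag": self.running_total > self.cumulative_limit,
        }


# Hypothetical scores in [0, 1]; each turn looks mild on its own.
scores = [0.2, 0.3, 0.3, 0.4, 0.4]
monitor = ConversationMonitor()
results = [monitor.observe(s) for s in scores]

for turn, result in enumerate(results, start=1):
    print(turn, result)
```

With these assumed numbers, no single turn exceeds the per-turn limit, yet the decayed running total crosses the conversation-level limit on the fourth turn. That is the shape of the problem the researchers describe: the risk sits in the accumulated context rather than in any one prompt.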

The disclosure comes as Cato Networks demonstrated a proof-of-concept (PoC) attack that targets Atlassian's Model Context Protocol (MCP) server and its integration with Jira Service Management (JSM) to trigger prompt injection attacks when a malicious support ticket submitted by an external threat actor is processed by a support engineer using MCP tools.

The cybersecurity company has coined the term “Living off AI” to describe these attacks, in which an AI system that executes untrusted input without adequate isolation guarantees can be abused by adversaries to gain privileged access without having to authenticate themselves.

“The threat actor never accessed the Atlassian MCP directly,” the researchers said. “Instead, the support engineer acted as a proxy, unknowingly executing malicious instructions through Atlassian MCP.”
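As a rough illustration of what an isolation guarantee for untrusted input could look like, the sketch below restricts which tools an AI assistant may invoke depending on where the content it is processing came from. This is an assumption-laden example, not Cato Networks' recommendation or Atlassian's implementation. The tool names, the `Trust` levels, and the `authorize` helper are all hypothetical.

```python
from enum import Enum


class Trust(Enum):
    INTERNAL = "internal"  # content written by authenticated staff
    EXTERNAL = "external"  # content submitted from outside, e.g. a support ticket


# Hypothetical tool names; these are NOT the actual Atlassian MCP tools.
READ_ONLY_TOOLS = {"summarize_ticket", "search_knowledge_base"}
PRIVILEGED_TOOLS = {"read_internal_project", "post_comment", "update_ticket"}


def allowed_tools(content_trust: Trust) -> set:
    """Return the tools that may run while handling content of this trust level."""
    if content_trust is Trust.EXTERNAL:
        # Externally supplied text is treated as data only: no privileged actions.
        return set(READ_ONLY_TOOLS)
    return READ_ONLY_TOOLS | PRIVILEGED_TOOLS


def authorize(tool_name: str, content_trust: Trust) -> bool:
    """Check a requested tool call against the allowlist for the content's origin."""
    return tool_name in allowed_tools(content_trust)


print(authorize("summarize_ticket", Trust.EXTERNAL))
print(authorize("update_ticket", Trust.EXTERNAL))
print(authorize("update_ticket", Trust.INTERNAL))
```

In this sketch the decision depends on the origin of the content rather than on the identity of the engineer running the tool, so instructions embedded in an external ticket cannot borrow the engineer's privileges.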