The cybersecurity landscape has been dramatically reshaped by the advent of generative AI. Attackers now use large language models (LLMs) to impersonate trusted people and automate social engineering tactics at scale.

Let’s look at the state of these rising attacks, what is fueling them, and how to actually prevent them, not just detect them.

The most powerful person on the call may not be real

Recent research reports highlight the growing sophistication and prevalence of AI-powered attacks:

In this new era, trust can’t be assumed or simply detected. It must be proven deterministically and in real time.

Why the problem is growing

Three trends are converging to make AI impersonation a major threat vector:

- AI makes deception cheap and scalable: With voice-cloning and other open-source tools, threat actors can impersonate anyone using only a few minutes of reference material.

- Virtual collaboration exposes trust gaps: Tools such as Zoom, Teams, and Slack assume the person behind the screen is who they claim to be. Attackers exploit that assumption.

- Defenses usually rely on probability, not proof: Deepfake detection tools use identity markers and analytics to guess whether someone is real. That’s not good enough in high-stakes environments.

And while endpoint tools or user training can help, they aren’t built to answer a critical question in real time: Can I trust the person I’m talking to?

AI detection technologies are insufficient

Traditional defenses focus on detection, such as training users to spot suspicious behavior or using AI to analyze whether someone is fake. But deepfakes are getting too good, too fast. You can’t fight AI-powered deception with probability.

True prevention requires a different foundation, one based on provable trust rather than assumption. That means:

- Verifying identity: Only verified, authorized users should be able to join sensitive meetings or chats, based on cryptographic credentials rather than passwords or codes.

- Checking device integrity: If a user’s device is infected, jailbroken, or non-compliant, it becomes a potential entry point for attackers, even if the user’s identity is verified. Block these devices from meetings until they are remediated.

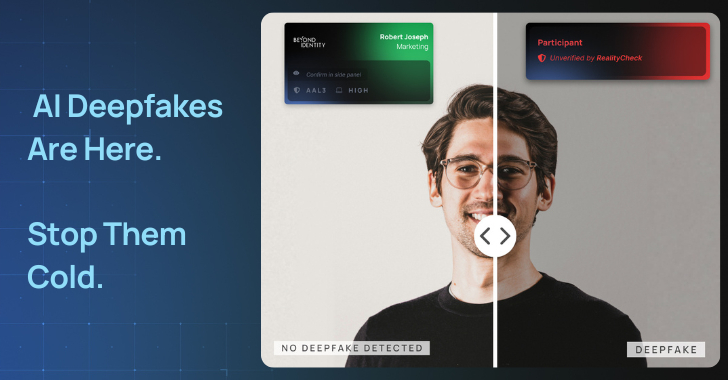

- Visible trust indicators: Other participants need to see proof that everyone in the meeting is who they say they are and is using a secure device. This removes the burden of judgment from end users.
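The identity-verification point above rests on proof of possession: a cloned face or voice cannot answer a cryptographic challenge without the enrolled key. The following is a minimal Python sketch of that challenge-response idea, not how any particular product implements it. It assumes a hypothetical enrollment registry and uses HMAC-SHA256 over a shared per-device key only because it ships with the standard library; production systems generally use an asymmetric key pair held in device hardware instead, so the verifier never stores a secret.

```python
import hashlib
import hmac
import secrets

# ASSUMPTION: a shared per-device secret with HMAC-SHA256 stands in for the
# hardware-bound asymmetric key pair (e.g. TPM / Secure Enclave) that real
# deployments typically use. Chosen here only because it is in the stdlib.
ENROLLED_DEVICE_KEYS: dict[str, bytes] = {}  # hypothetical enrollment registry


def enroll_device(device_id: str) -> bytes:
    """Hypothetical enrollment: generate a device key and register it."""
    key = secrets.token_bytes(32)
    ENROLLED_DEVICE_KEYS[device_id] = key
    return key


def issue_challenge() -> bytes:
    """Verifier side: a fresh random nonce for every join attempt."""
    return secrets.token_bytes(32)


def respond_to_challenge(device_key: bytes, challenge: bytes) -> bytes:
    """Device side: prove possession of the key without sending the key itself."""
    return hmac.new(device_key, challenge, hashlib.sha256).digest()


def verify_response(device_id: str, challenge: bytes, response: bytes) -> bool:
    """Verifier side: admit only if the response matches the enrolled key."""
    key = ENROLLED_DEVICE_KEYS.get(device_id)
    if key is None:
        return False  # unenrolled device: no credential, no entry
    expected = hmac.new(key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)  # constant-time comparison


if __name__ == "__main__":
    key = enroll_device("laptop-42")
    challenge = issue_challenge()
    # Legitimate device holding the enrolled key.
    print(verify_response("laptop-42", challenge, respond_to_challenge(key, challenge)))  # True
    # Impostor with a convincing face and voice, but no enrolled key.
    impostor_key = secrets.token_bytes(32)
    print(verify_response("laptop-42", challenge, respond_to_challenge(impostor_key, challenge)))  # False
```

A fresh nonce per join attempt means a recorded response cannot be replayed later, and `hmac.compare_digest` avoids leaking timing information during the comparison.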

Prevention means creating conditions where impersonation isn’t just difficult, it’s impossible. That’s how you shut down AI deepfake attacks before they enter high-risk conversations such as board meetings, financial transactions, or vendor collaborations.

| Detection-based approach | Prevention-based approach |
|---|---|
| Flags anomalies after they appear | Blocks unauthorized users before they join |
| Relies on heuristics and guesswork | Uses cryptographic proof of identity |
| Requires users to make judgment calls | Provides visible, verified trust indicators |
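The prevention column above, together with the device-integrity check described earlier, can be sketched as a gate that admits a participant only when both identity and device posture pass, and that re-evaluates on every new device report. This is an illustrative Python sketch under stated assumptions: the posture fields, the `Badge` states, and the function names are all hypothetical, and real products gather far richer risk signals.

```python
from dataclasses import dataclass
from enum import Enum


class Badge(Enum):
    """Hypothetical trust indicator shown to other participants."""
    VERIFIED = "verified"
    BLOCKED = "blocked"


@dataclass(frozen=True)
class DevicePosture:
    """Hypothetical device-integrity signals reported by the endpoint."""
    malware_detected: bool
    jailbroken: bool
    os_patched: bool
    disk_encrypted: bool


def posture_ok(p: DevicePosture) -> bool:
    """A device passes only if it is clean, not jailbroken, and compliant."""
    return (
        not p.malware_detected
        and not p.jailbroken
        and p.os_patched
        and p.disk_encrypted
    )


def evaluate_badge(identity_verified: bool, posture: DevicePosture) -> Badge:
    """Prevention, not detection: both checks must pass or the user is blocked."""
    if identity_verified and posture_ok(posture):
        return Badge.VERIFIED
    return Badge.BLOCKED


def monitor(identity_verified: bool, reports: list[DevicePosture]) -> list[Badge]:
    """Re-evaluate on every posture report so the badge can change mid-meeting."""
    return [evaluate_badge(identity_verified, r) for r in reports]


if __name__ == "__main__":
    healthy = DevicePosture(malware_detected=False, jailbroken=False,
                            os_patched=True, disk_encrypted=True)
    jailbroken = DevicePosture(malware_detected=False, jailbroken=True,
                               os_patched=True, disk_encrypted=True)
    for badge in monitor(True, [healthy, jailbroken, healthy]):
        print(badge.value)  # verified, blocked, verified
```

Because `monitor` re-runs the same check on each report, a device that becomes jailbroken mid-meeting loses its badge, and regains it only once a later report shows it has been remediated.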

Eliminate deepfake threats from your calls

RealityCheck is built to close this gap within collaboration tools. It gives each participant a visible, verified identity badge backed by cryptographic device authentication and continuous risk checks.

Currently available for Zoom and Microsoft Teams (video and chat), RealityCheck:

- Verifies that each participant’s identity is real and authorized

- Validates device security in real time, even on unmanaged devices

- Displays a visible badge to show others that you’ve been verified

If you want to see how it works, Beyond Identity is hosting a webinar where you can see the product in action. Sign up here!