Cybersecurity researchers have discovered security flaws in SAP AI Core, a cloud-based platform for building and deploying artificial intelligence (AI) workflows. The flaws could be exploited to obtain access tokens and customer data.

The five vulnerabilities have been collectively dubbed SAPwned by cloud security company Wiz.

“The vulnerabilities we discovered could have allowed attackers to access customer data and contaminate internal artifacts by spreading to related services and other customers’ environments,” security researcher Hilai Ben-Sasson said in a report shared with The Hacker News.

Following responsible disclosure on January 25, 2024, SAP addressed the shortcomings as of May 15, 2024.

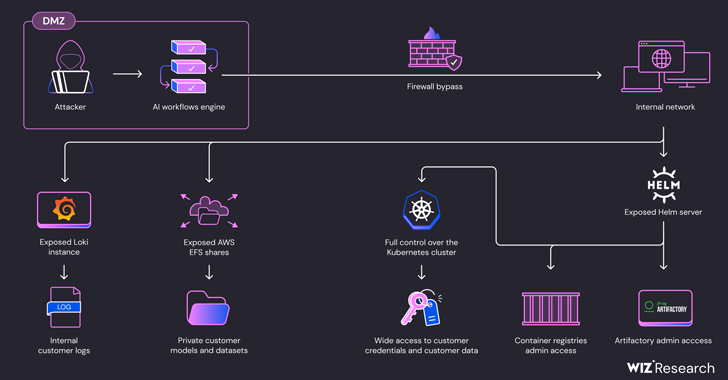

In a nutshell, the vulnerabilities allow unauthorized access to customers' private artifacts and to their credentials for cloud environments such as Amazon Web Services (AWS), Microsoft Azure, and SAP HANA Cloud.

They can also be used to modify Docker images in SAP's internal container registry, SAP's Docker images on Google Container Registry, and artifacts hosted on SAP's internal Artifactory server, potentially leading to a supply chain attack on SAP AI Core services.

In addition, the access could be used to gain cluster administrator privileges on SAP AI Core's Kubernetes cluster by exploiting the fact that the Helm package manager server was exposed to both read and write operations.

“Using this level of access, an attacker could gain direct access to other customers’ Pods and steal sensitive data such as models, datasets, and code,” Ben-Sasson explained. “This access also allows attackers to tamper with customer Pods, corrupt AI data, and manipulate model output.”

Wiz said the problems arise because the platform makes it possible to run malicious AI models and training routines without proper isolation and sandboxing mechanisms.

As a result, a threat actor could build a regular AI application on SAP AI Core, bypass network restrictions, and probe the Kubernetes Pod's internal network to obtain AWS tokens, then exploit misconfigurations in AWS Elastic File System (EFS) shares to access customer code and training datasets.

“Training AI by definition requires running arbitrary code; therefore, appropriate guardrails must be in place to ensure that untrusted code is properly separated from internal assets and other tenants,” Ben-Sasson said.

The findings come as Netskope revealed that the growing enterprise use of generative artificial intelligence has pushed organizations to adopt blocking controls, data loss prevention (DLP) tools, real-time coaching, and other mechanisms to reduce risk.

“Regulated data (data that organizations are required to protect by law) accounts for more than a third of sensitive data transferred to generative artificial intelligence (genAI) applications, putting businesses at potential risk of costly data breaches,” the company said.

The disclosure also coincides with the emergence of a new cybercriminal threat group called NullBulge, which has targeted AI- and gaming-focused organizations since April 2024 to steal sensitive data and sell compromised OpenAI API keys on underground forums, all while claiming to be a hacktivist group that “protects artists around the world” from artificial intelligence.

“NullBulge targets the software supply chain by weaponizing code in public repositories on GitHub and Hugging Face, leading victims to import malicious libraries, or via modpacks used in games and simulation software,” SentinelOne security researcher Jim Walter said.

“The group uses tools like AsyncRAT and XWorm before delivering LockBit payloads built using the leaked LockBit Black builder. Groups like NullBulge represent the ongoing threat of low-barrier-to-entry ransomware, combined with the evergreen effect of info-stealer infections.”